The AI Data Center Revolution: Infrastructure, Investment, and Innovation in 2025 - Nvidia, Arista, Cisco, IBM and more

- BC

- Jun 10, 2025

Updated: Jun 10, 2025

The artificial intelligence revolution is fundamentally reshaping the data center landscape, driving unprecedented levels of investment, construction, and technological innovation. As AI workloads become increasingly sophisticated and power-hungry, traditional data center infrastructure is being reimagined to meet the extraordinary demands of machine learning, large language models, and AI inference at scale.

The Scale of AI Data Center Investment

The numbers tell a compelling story of explosive growth. Microsoft alone expects to spend $80 billion in fiscal 2025 on AI-enabled data centers, with over half of that investment taking place in the United States. This represents just one company's commitment to AI infrastructure, highlighting the broader industry transformation underway.

Capital deployment in US data center construction reached $31.5 billion in 2024, driven primarily by AI demand. The boom shows no sign of slowing: global data center construction is at record levels, and all signals suggest that AI demand will keep building momentum through 2025.

The hyperscale cloud providers are leading this charge. Amazon Web Services, Microsoft Azure, and Google Cloud are investing heavily in AI-specific infrastructure, recognizing that the traditional data center model cannot adequately support the unique requirements of AI workloads. Specialized GPU cloud providers are growing quickly as well: CoreWeave, for example, had a fleet of approximately 45,000 GPUs by July 2024 and aimed to operate in 28 locations globally by the end of 2024.

Current State of AI Data Centers in the US

The United States has emerged as the epicenter of AI data center development. Among secondary markets, Houston, Southern California, and Charlotte/Raleigh saw significant vacancy declines in 2024, while construction activity surged in Charlotte/Raleigh and Austin/San Antonio. These locations are being chosen strategically for their power availability, connectivity, and proximity to major metropolitan areas.

Demand for data centers in the United States is at an all-time high in 2025, driven by the rapid expansion of artificial intelligence (AI), cloud computing, and enterprise storage. Even record construction volumes cannot keep pace with demand for data center capacity.

The geographic distribution of AI data centers is being influenced by several factors beyond traditional considerations. Power grid capacity has become a critical constraint, with some regions experiencing delays in new construction due to electrical infrastructure limitations. Water availability for cooling systems is another emerging concern, particularly in arid regions that were previously attractive for data center development.

Beyond GPUs: The Critical Infrastructure Stack

While GPUs capture most of the attention in AI data center discussions, they represent only one component of a complex infrastructure ecosystem. The supporting hardware and systems are equally critical to successful AI operations and represent significant business opportunities for specialized suppliers.

Networking Infrastructure

AI workloads place extraordinary demands on network infrastructure. Unlike traditional data center applications, AI training and inference require massive amounts of data movement between compute nodes, creating bottlenecks that can severely impact performance. High-speed, low-latency networking becomes crucial for AI clusters where thousands of GPUs must communicate efficiently.

Arista Networks has positioned itself as a leader in AI data center networking, providing high-performance switches and routing solutions designed for AI workloads. Its products enable the high-bandwidth, low-latency communication required for distributed AI training and inference. Other key networking suppliers include Cisco, Juniper Networks, and Mellanox (now part of NVIDIA), each offering specialized solutions for AI data center connectivity.

InfiniBand networking has become particularly important for AI applications, providing the ultra-low latency and high bandwidth required for GPU-to-GPU communication in large AI clusters. Ethernet-based solutions are also evolving rapidly, with 400G and 800G speeds becoming standard for AI data center deployments.
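To give a rough sense of why link speed matters, here is a back-of-the-envelope sketch in Python. It assumes data-parallel training synchronized with a ring all-reduce, a common collective pattern in which each GPU sends about 2(N - 1)/N times the gradient payload. The model size, GPU count, and helper names are hypothetical, and the timing ignores latency, protocol overhead, and the overlap of communication with compute that real frameworks rely on.

```python
def ring_allreduce_bytes_per_gpu(payload_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in a ring all-reduce of payload_bytes.

    A ring all-reduce is a reduce-scatter followed by an all-gather; in each
    phase every GPU sends (N - 1) chunks of size payload_bytes / N.
    """
    if num_gpus < 2:
        return 0.0  # nothing to exchange with a single GPU
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


def transfer_seconds(num_bytes: float, link_gbps: float) -> float:
    """Idealized time to send num_bytes over one link of link_gbps gigabits/s."""
    return num_bytes * 8 / (link_gbps * 1e9)


# Hypothetical workload: 7 billion parameters with 2-byte (BF16) gradients.
gradient_bytes = 7e9 * 2
per_gpu = ring_allreduce_bytes_per_gpu(gradient_bytes, num_gpus=1024)
for gbps in (400, 800):
    seconds = transfer_seconds(per_gpu, gbps)
    print(f"{gbps}G link: ~{seconds:.2f} s per full gradient sync")
```

Under these assumptions, doubling the line rate from 400G to 800G halves the idealized sync time, which is why per-port bandwidth is such a prominent selling point for AI fabrics.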

Advanced Cooling Systems

The cooling challenge in AI data centers represents perhaps the most significant departure from traditional data center design. AI workloads are projected to drive a 160% increase in data center power demand by 2030, and individual chips now carry thermal design power (TDP) ratings of up to 1,500 W and beyond.
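A 160% increase means demand reaches 2.6 times its baseline. The short sketch below converts that multiple into an implied compound annual growth rate. The baseline year is not stated in the text, so the 7-year horizon (2023 to 2030) used here is an assumption.

```python
def implied_annual_growth(total_increase_pct: float, years: float) -> float:
    """Compound annual growth rate implied by a total percentage increase."""
    multiple = 1 + total_increase_pct / 100  # a 160% increase is 2.6x the baseline
    return multiple ** (1 / years) - 1


# Assumed horizon: baseline year 2023, target year 2030.
rate = implied_annual_growth(160, years=2030 - 2023)
print(f"Implied growth: {rate:.1%} per year")  # prints "Implied growth: 14.6% per year"
```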

Traditional air cooling has reached its limits. Air cooling hit its practical ceiling around 2022, with general consensus that it can remove at most about 80 W/cm² of heat flux. This has driven innovation in liquid cooling technologies, with several approaches emerging:
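The 80 W/cm² figure is a limit on heat flux, that is, power per unit of surface area. A quick check in Python shows how a high-TDP chip compares. The 10 cm² area is an assumption for illustration, and real hot spots run well above the average flux computed here.

```python
AIR_COOLING_LIMIT_W_PER_CM2 = 80.0  # approximate ceiling quoted in the text


def average_heat_flux(power_w: float, area_cm2: float) -> float:
    """Average heat flux (W/cm^2) when power_w leaves through area_cm2."""
    return power_w / area_cm2


def air_coolable(power_w: float, area_cm2: float) -> bool:
    """True if the average flux is within the assumed air-cooling ceiling."""
    return average_heat_flux(power_w, area_cm2) <= AIR_COOLING_LIMIT_W_PER_CM2


# Hypothetical accelerator: 1,500 W through an assumed 10 cm^2 of silicon.
flux = average_heat_flux(1500, 10)
print(f"{flux:.0f} W/cm^2, air-coolable: {air_coolable(1500, 10)}")
# prints "150 W/cm^2, air-coolable: False"
```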

Direct-to-Chip Cooling: Single-phase direct-to-chip (DtC) cooling pumps water or another coolant through cold plates mounted in direct contact with the processors. Because a liquid carries far more heat per unit volume than air, this approach can handle much higher power densities than air cooling while remaining efficient.
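How much liquid does a cold plate need? A sensible-heat balance, Q = ṁ·c_p·ΔT, gives the mass flow rate required to carry a chip's heat away for a chosen temperature rise. This sketch assumes plain water and a 10 K rise across the plate, both illustrative choices; many deployments use water-glycol mixtures, which have a lower specific heat and so need somewhat more flow.

```python
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of liquid water near room temperature
WATER_DENSITY_KG_PER_L = 1.0   # approximate density of water


def required_flow_l_per_min(heat_w: float, delta_t_k: float,
                            cp: float = WATER_CP_J_PER_KG_K,
                            density: float = WATER_DENSITY_KG_PER_L) -> float:
    """Coolant flow (L/min) that carries heat_w with a delta_t_k temperature rise.

    Rearranges Q = m_dot * cp * dT for m_dot (kg/s), then converts to L/min.
    """
    mass_flow_kg_s = heat_w / (cp * delta_t_k)
    return mass_flow_kg_s / density * 60


# Hypothetical cold plate: 1,500 W with an assumed 10 K coolant temperature rise.
print(f"{required_flow_l_per_min(1500, 10):.2f} L/min")  # prints "2.15 L/min"
```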

Immersion Cooling: Immersion cooling could become a cornerstone of data center operations from 2025 onward, driven by the demands of AI infrastructure and stringent sustainability requirements. This technology submerges entire servers in a dielectric (electrically non-conductive) cooling fluid, enabling extremely high power densities while reducing the energy spent on cooling.

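Two-Phase Cooling: As chip power densities keep rising in 2025, two-phase liquid cooling is expected to emerge as a key approach for meeting the immense cooling demands of AI. In a two-phase system the coolant boils at the hot surface, absorbing heat as latent heat of vaporization while staying near its boiling point, before the vapor is condensed and recirculated.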
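The advantage of boiling shows up when comparing the mass flow each approach needs for the same heat load. The fluid properties below (specific heat of about 1,100 J/(kg·K), latent heat of about 100 kJ/kg) are rough, assumed values of the order seen in engineered dielectric coolants, and the 50% evaporated fraction is also an assumption, so treat the result as an order-of-magnitude comparison rather than a design figure.

```python
def single_phase_flow_kg_s(heat_w: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Sensible heat only: Q = m_dot * cp * dT."""
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def two_phase_flow_kg_s(heat_w: float, latent_j_per_kg: float, vapor_fraction: float) -> float:
    """Latent heat: Q = m_dot * x * h_fg, where x is the fraction that evaporates."""
    if not 0 < vapor_fraction <= 1:
        raise ValueError("vapor_fraction must be in (0, 1]")
    return heat_w / (latent_j_per_kg * vapor_fraction)


# Assumed dielectric-fluid properties (illustrative, not a specific product).
CP = 1_100.0       # J/(kg*K)
H_FG = 100_000.0   # J/kg
HEAT_W = 1_500.0   # chip power, matching the TDP figure in the text

single_flow = single_phase_flow_kg_s(HEAT_W, CP, delta_t_k=10)
boiling_flow = two_phase_flow_kg_s(HEAT_W, H_FG, vapor_fraction=0.5)
print(f"single-phase: {single_flow:.3f} kg/s, two-phase: {boiling_flow:.3f} kg/s")
print(f"two-phase needs ~{single_flow / boiling_flow:.1f}x less flow")
```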

Key cooling technology suppliers include Vertiv, Schneider Electric, Asetek, LiquidCool Solutions, and Iceotope. Among server vendors, Lenovo has been an early mover with its Neptune water cooling technology, aimed at efficient high-power computing.

Power Infrastructure and Management

AI data centers require fundamentally different power infrastructure compared to traditional facilities. High power density, combined with the need for extremely stable power delivery, creates unique challenges. Uninterruptible Power Supply (UPS) systems must be sized not just for total capacity but for the specific power characteristics of AI workloads; large training jobs, for instance, can swing a cluster's draw sharply as thousands of GPUs move in lockstep between compute and communication phases.
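To see why average load is a poor basis for sizing, the sketch below summarizes a sampled power trace: its peak, its mean, and the largest step between consecutive samples. The trace values are made up for illustration (a cluster alternating between compute-heavy and communication-heavy phases) and are not measurements.

```python
from statistics import mean


def load_profile_summary(samples_kw: list[float]) -> dict[str, float]:
    """Peak, mean, peak-to-mean ratio, and largest step change of a power trace."""
    if len(samples_kw) < 2:
        raise ValueError("need at least two samples")
    peak = max(samples_kw)
    avg = mean(samples_kw)
    max_step = max(abs(b - a) for a, b in zip(samples_kw, samples_kw[1:]))
    return {"peak_kw": peak, "mean_kw": avg,
            "peak_to_mean": peak / avg, "max_step_kw": max_step}


# Hypothetical one-second samples, in kW, for a training cluster.
trace = [900, 910, 450, 460, 905, 915, 440, 455]
summary = load_profile_summary(trace)
for key, value in summary.items():
    print(f"{key}: {value:.2f}")
```

In this made-up trace the peak sits about 35% above the mean and the load moves by up to 475 kW between consecutive samples; power equipment sized only to the mean, or unable to follow such steps, would be under strain.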

Power distribution becomes critical at the rack level, where individual racks may consume 50 to 100 kW or more. This requires specialized power distribution units (PDUs), busway systems, and electrical infrastructure capable of handling these extreme loads safely and efficiently.
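Rack power translates directly into current on the feeders. For a balanced three-phase load, I = P / (√3 × V_LL × PF). The sketch below applies that formula; the 208 V and 415 V line-to-line voltages and the 0.95 power factor are assumptions chosen for illustration.

```python
import math


def three_phase_current_a(power_kw: float, line_voltage_v: float,
                          power_factor: float = 0.95) -> float:
    """Line current (A) for a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return power_kw * 1_000 / (math.sqrt(3) * line_voltage_v * power_factor)


for rack_kw in (50, 100):
    for volts in (208, 415):
        amps = three_phase_current_a(rack_kw, volts)
        print(f"{rack_kw} kW rack at {volts} V: ~{amps:.0f} A per phase")
```

For the same rack, the higher distribution voltage roughly halves the current, which is one reason high-density designs tend to favor it.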

Major power infrastructure suppliers include Eaton, Schneider Electric, Vertiv, and ABB, each developing AI-specific solutions for power distribution and management.

Storage and Memory Systems

AI workloads generate unique storage requirements that go beyond traditional enterprise storage needs. Training large language models requires sustained, high-throughput access to massive datasets, while inference applications need fast model loading and extremely low-latency access to real-time data.

High-performance storage solutions include NVMe flash arrays, parallel file systems, and specialized AI storage appliances. Companies like Pure Storage, NetApp, Dell Technologies, and VAST Data are developing storage solutions specifically optimized for AI workloads.
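One way to see why parallel file systems and NVMe arrays matter is to estimate the aggregate read bandwidth needed to keep every accelerator fed during training. The GPU count, per-GPU throughput, and sample size below are hypothetical.

```python
def required_read_gb_per_s(num_gpus: int, samples_per_s_per_gpu: float,
                           bytes_per_sample: float) -> float:
    """Aggregate storage read bandwidth (GB/s) needed so no GPU waits on data."""
    return num_gpus * samples_per_s_per_gpu * bytes_per_sample / 1e9


# Hypothetical vision-training job: 1,024 GPUs, 2,000 images/s each, ~150 KB per image.
need = required_read_gb_per_s(1_024, 2_000, 150e3)
print(f"~{need:.0f} GB/s sustained reads")  # prints "~307 GB/s sustained reads"
```

Local caching and prefetching can reduce what the shared storage tier must deliver, so this kind of estimate is best read as a rough ceiling.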

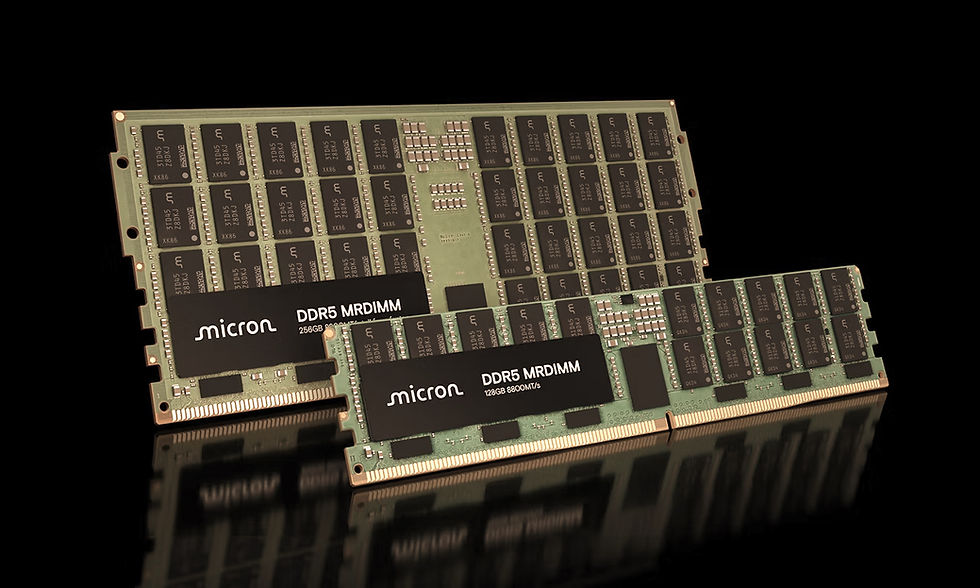

Memory systems also require special consideration, with high-bandwidth memory (HBM) becoming standard for AI accelerators. The memory hierarchy in AI data centers must be carefully designed to minimize data movement and maximize compute efficiency.
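A simple capacity calculation shows why HBM capacity shapes system design: during inference, the model's weights alone must fit in accelerator memory. The model size, bytes per parameter, 80 GB of HBM per device, and the 80% usable fraction (leaving headroom for activations and the KV cache) are all assumptions.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory (GB) needed just to hold the model weights."""
    return num_params * bytes_per_param / 1e9


def min_accelerators(num_params: float, bytes_per_param: float,
                     hbm_gb_per_device: float, usable_fraction: float = 0.8) -> int:
    """Fewest devices whose usable HBM can hold the weights."""
    needed = weight_memory_gb(num_params, bytes_per_param)
    return math.ceil(needed / (hbm_gb_per_device * usable_fraction))


# Hypothetical 70B-parameter model on 80 GB devices.
for label, nbytes in (("16-bit", 2), ("8-bit", 1)):
    gb = weight_memory_gb(70e9, nbytes)
    print(f"{label}: {gb:.0f} GB of weights -> {min_accelerators(70e9, nbytes, 80)} devices")
```

When the weights are split across several devices, each forward pass moves activations between them over the interconnect, which is exactly the data movement a well-designed memory hierarchy tries to minimize.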

Specialized AI Hardware Beyond GPUs

While NVIDIA dominates the GPU market for AI training, the ecosystem includes numerous other specialized processors and accelerators:

AI Inference Chips: Companies like Intel (with its Habana Gaudi accelerators), AMD, and various startups are developing processors optimized for AI inference, which has different characteristics from training, such as smaller batch sizes and stricter latency targets.

Custom ASICs: Major cloud providers are developing their own AI chips, including Google's TPUs, Amazon's Inferentia and Trainium chips, and Microsoft's Maia accelerators.

Neuromorphic Processors: Emerging technologies that mimic brain architecture for ultra-efficient AI processing are beginning to appear in specialized applications.

Key Supplier Companies in the AI Data Center Ecosystem

The Path Forward: Challenges and Opportunities

The AI data center revolution is still in its early stages, with significant challenges and opportunities ahead. Five key forces will fundamentally reshape data center cooling in 2025: the mounting "heat crisis," supply chain reengineering, power availability, sustainability as a core business imperative, and the rise of specialized cooling solutions.

Power availability remains a critical constraint, with many regions experiencing delays in new data center construction due to electrical grid limitations. This is driving innovation in energy efficiency, alternative power sources, and grid-interactive technologies.
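Facility-level energy efficiency is commonly tracked with power usage effectiveness (PUE), the ratio of total facility power to IT equipment power. This sketch shows how much grid capacity a given IT load consumes at different PUE values; the 100 MW IT load and the PUE levels are hypothetical.

```python
def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE (total / IT)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue


IT_LOAD_MW = 100.0  # hypothetical campus IT load
for pue in (1.5, 1.2):
    total = facility_power_mw(IT_LOAD_MW, pue)
    print(f"PUE {pue}: {total:.0f} MW from the grid, {total - IT_LOAD_MW:.0f} MW of overhead")
```

Where grid capacity is the binding constraint, each 0.1 reduction in PUE at this scale frees roughly 10 MW that could go to compute instead of overhead.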

Sustainability concerns are also driving innovation, with companies seeking to minimize the environmental impact of AI data centers. Microsoft announced that its new data center designs will use closed-loop, chip-level cooling that consumes zero water for cooling, a significant commitment to sustainable operations.

The supply chain for AI data center components is also evolving rapidly, with traditional suppliers adapting their products and new companies emerging to address AI-specific requirements. This creates opportunities for innovation and competitive advantage across the entire ecosystem.

Conclusion

The AI data center revolution represents one of the most significant infrastructure buildouts in modern history. While GPUs capture headlines, the success of AI initiatives depends on a complex ecosystem of networking, cooling, power, storage, and facilities infrastructure. Companies that can successfully navigate this transformation and provide specialized solutions for AI workloads will be well-positioned to benefit from this multi-billion-dollar market expansion.

The investments being made today in AI data center infrastructure will define the competitive landscape for artificial intelligence for years to come. As advances in semiconductor technology continue to drive performance gains, the supporting infrastructure must evolve in parallel to unlock the full potential of AI.

The United States currently leads in AI data center investment and construction, but this is a global phenomenon that will continue to reshape the technology landscape. Understanding the full scope of AI data center requirements – from GPUs to cooling systems to power infrastructure – is essential for anyone involved in the technology industry's future.

Which stocks do you own as your AI plays?
